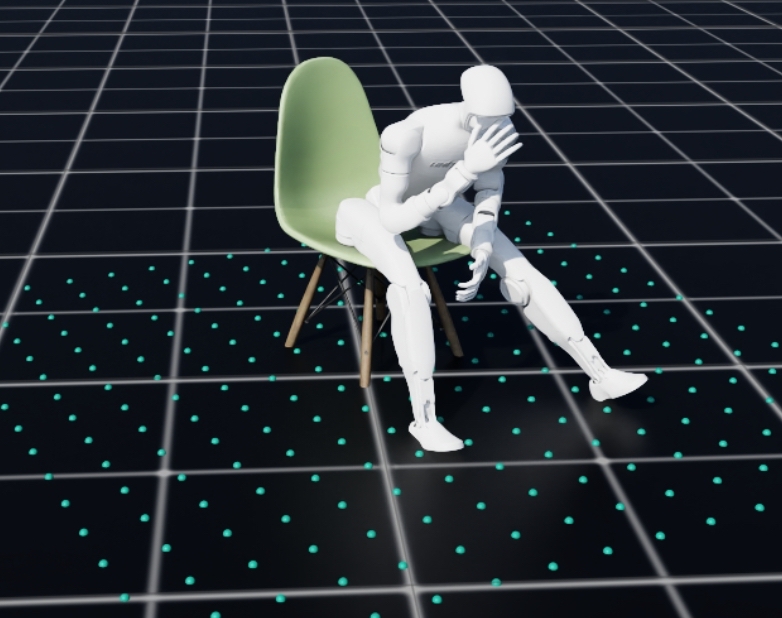

Intelligent robots are advancing rapidly, with embodied agents increasingly expected to work and live alongside humans in households, factories, hospitals, schools, and other settings. For these agents to operate safely, socially, and intelligently, they must effectively interact with humans and adapt to changing environments. Moreover, such interactions can transform human behavior and even reshape the environment, for example through adjustments in human motion during robot-assisted handovers or the redesign of objects for improved robotic grasping. Beyond established research in human-human and human-scene interactions, vast opportunities remain in exploring human-robot-scene collaboration. This workshop will explore the integration of embodied agents into dynamic human-robot-scene interactions. Our focus includes, but is not limited to:

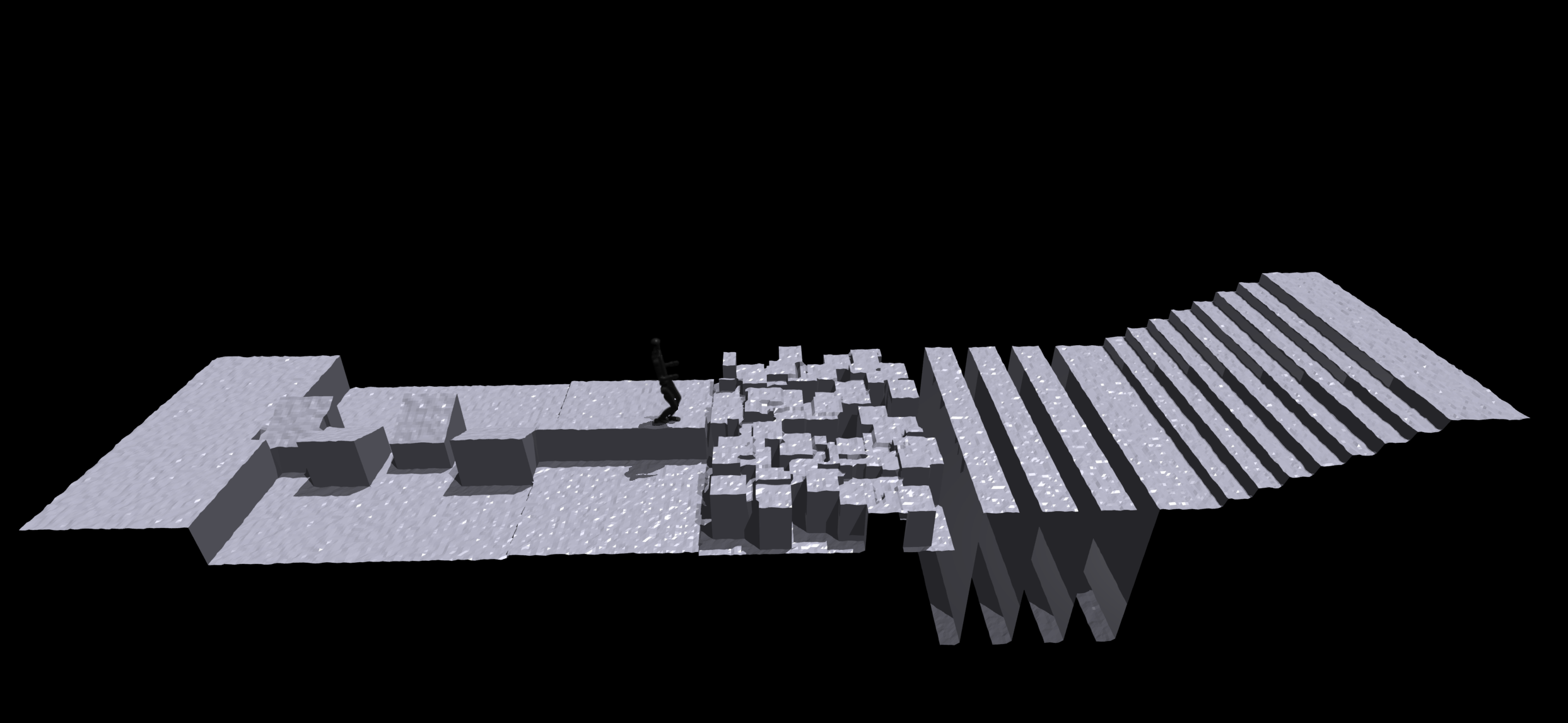

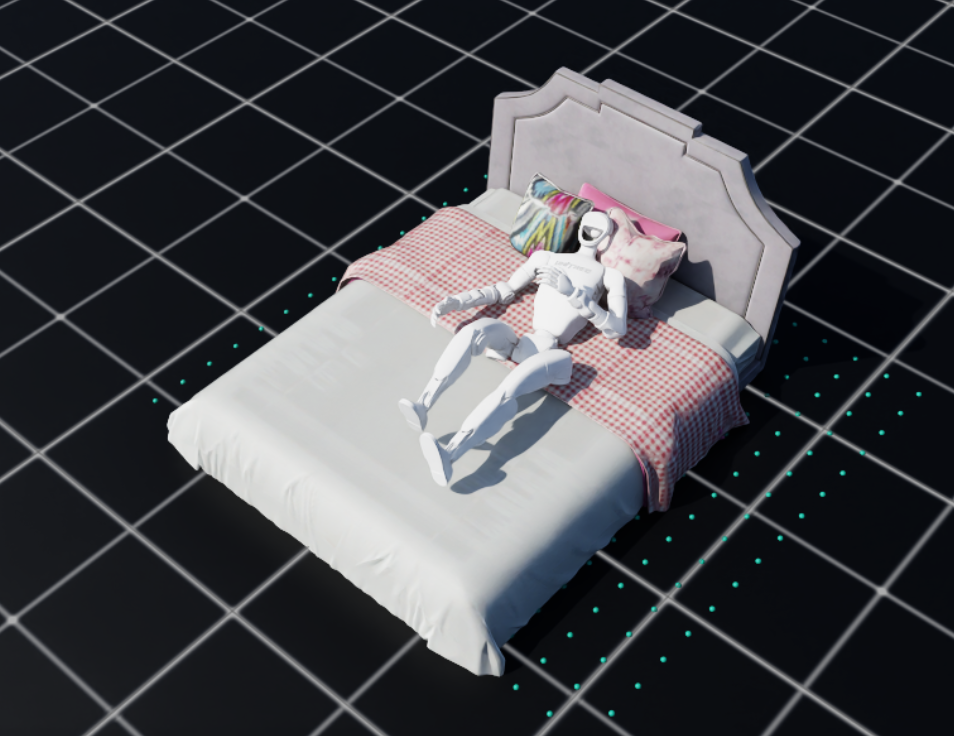

We are excited to announce the Multi-Terrain Humanoid Locomotion Challenge and the Humanoid-Object Interaction Challenge, which will be held in conjunction with the workshop. The challenges aim to foster advances in humanoid-scene interaction by providing a platform for researchers to showcase their work on embodied agents in dynamic environments. For more details, please visit the challenge websites.

🏆 Awards: 🥇 First Prize ($1000) 🥈 Second Prize ($500) 🥉 Third Prize ($300)

| Time | Activity | Details |
|---|---|---|
| 13:30 - 13:40 | Welcome & Introduction | Host: Jingya Wang |
| 13:40 - 14:10 | Invited talk 1: Visual Embodied Planning | Speaker: Roozbeh Mottaghi |
| 14:10 - 14:40 | Invited talk 2: Perceiving Humans and Interactions at Affordable Cost. Abstract: Understanding human behaviours requires information not only from the humans themselves, but also holistic information about the surrounding environment. Embedding such perception ability into robots at affordable cost is important to allow embodied AI to help every household. In this talk, I will discuss our recent work on perceiving humans and their interactions with the environment in a cost-effective way. For dynamic human-object interactions, we propose procedural interaction generation, which allows scaling up interaction data for training interaction reconstruction models that generalize to in-the-wild images and videos captured by mobile phones. I will then discuss our method PhySIC, an efficient optimization approach that reconstructs the human, the scene, and, importantly, physically plausible contacts from a single image. I will also present Human3R, which reconstructs everyone everywhere at 15 fps on a single GPU. | Speaker: Xianghui Xie |
| 14:40 - 15:10 | Oral Presentations | |
| 15:10 - 15:40 | Coffee Break & Poster Session | |
| 15:40 - 16:10 | Invited talk 3: Memory as a model of the world | Speaker: Mahi Shafiullah |
| 16:10 - 16:40 | Invited talk 4: Towards powerful and efficient VLA models | Speaker: Hang Zhao |
| 16:40 - 17:10 | Invited talk 5: Planning and Inverse Planning with Neuro-Symbolic Concepts for Human-Robot-Scene Interaction | Speaker: Jiayuan Mao |
| 17:10 - 17:30 | Challenge award ceremony and Concluding remarks | Host: Yuexin Ma |

listed alphabetically